BunnyVision

Chapter 1

Purpose Driven Design

During the time afforded to me on this planet, I have developed a passion for software and deeply embedded computing technologies. Some might consider it an obsession, but I feel that is a matter of semantics. I have always enjoyed the “details”. Details matter. My view, however, is that deep technical acumen is a necessary but insufficient condition for success in product design. The belief that technical success translates into product success is a trap many can easily fall into. Connect your product with a purpose, and you win every time.

My personal path with embedded computing technologies began with the Nintendo Entertainment System and the Apple IIe computer. Those products were the motive force behind a deep dive into the 6502 microprocessor architecture in the 7th grade.

I had developed a drive to learn the details, and it pushed me. This push was complemented by a pull generated by a sense of purpose. It wasn’t the pure technical detail that was the prime mover. Rather, it was a sense of the aesthetic I could achieve with the sum of the technological and design components.

At the time, videogames and the associated graphics technology were an absolute fascination. The mathematics of sprite rotation was mesmerizing. Being able to create new works that could be enjoyed by others (as well as myself) was a source of great purpose. I could work through the difficult details and learn what I needed along the way. Based upon my grades in school, no one would argue that I was among the best and brightest. I would argue that the pull from a sense of purpose could take me further than pure academic prowess.

I later developed an even deeper fascination for music, performance instruments and audio. I can still distinctly remember the smell of the demonstration room at the music store when I first laid eyes on the Ensoniq ASR-10 sampler.

The vacuum fluorescent display drew me in. The sleek black anodized brushed aluminum housing seemed like the hallmark of “professional”. The arrays of tactile push buttons were an invitation to experience a seemingly endless creative avarice. The technology buried inside the ASR-10 enabled another path of creative expression. The purposeful design aesthetic once again pulled me into learning about the underlying technology. I quickly developed a deep curiosity about what made the machine operate.

I did not end up owning an ASR-10 and had to settle for the lower-cost SQ2. It was a story of (literal) life and death that I’ll have to tell another time. My teardown of the SQ2 revealed a Motorola 68000 microprocessor. I also discovered that the 68000 was used in the Sega Genesis. No person in the 1990s could escape Sonic the Hedgehog and the Motorola 68000 in their daily life. Little did I know that Motorola (whose semiconductor business was later spun off as Freescale and is now part of NXP) would become a big part of my design toolbox.

Video games and music eventually led to an equally significant fascination with electric guitar processing technology. It started with analog circuits and amplifiers, but it wasn’t long before I was pulled into Digital Signal Processing (DSP) technology. The embedded software/firmware element was a constant attraction.

I entered The Pennsylvania College of Technology with zero knowledge of z-transforms, IIR filters or anything of the sort. I barely graduated high school, near the bottom of the class. However, I knew what it felt like to have my face melted off by Leslie West (Mountain) in front of a “wall” of Marshall amplifiers. I could see how music changed people in a live setting. It was almost like the brain could access a different dimension.

This purpose pulled me through an undergraduate education in electronics and a graduate degree in acoustics (the science of sound). Any struggle on the technical side was met with the “pull” from what was on the “other side”.

The DSP56000 can be found in the early versions of the Line6 POD. The POD was one of the first devices that could model tube amplifiers, and it just so happened to be released when I was taking my first DSP course. Long before USB and easy firmware updates, Line6 would send updates to the music store via DIP EEPROMs and snail mail. My connection to Motorola (now NXP) is very much tied to the passions and purpose along my life path.

Quite a bit of the content I have produced leans toward applications in graphics (the Nintendo) and audio (synthesizers and guitar). However, purpose finds its way into many different aspects of life. The BunnyVision concept exists on an orthogonal, yet equally purposeful, axis that I recently discovered through the bittersweet combination of pain and recovery. Even though BunnyVision is a fundamentally different source of passion and purpose, I get to draw upon many of the lessons learned in audio, acoustics, and graphics.

The Genesis of BunnyVision

2021 was a difficult year for many people. COVID-19 had a significant effect on virtually all aspects of our daily lives. Many of the effects spilled into seemingly unrelated areas of life and work long after the initial onslaught of medical concerns, touching everything from closing sales deals “remotely” and finding chips in a difficult supply chain to forging strong relationships with team members. I found respite from the stresses of work, life, and global turmoil in a daily hike up a local trail.

The Mount Nittany Trail is a popular local hiking trail in State College, PA that happened to be 5 minutes from my office at the time. Every day at lunch time, I would spend roughly 60 minutes ascending and descending the trail. The main trail is short, but very steep and rocky in sections. Some refer to Pennsylvania as “Rocksylvania”.

At the time, my personal health was suffering. This daily routine became critical to both my physical and mental well-being. I had plenty of space to think. Whatever problems I had entering the trailhead, technical or otherwise, would soon evaporate. At least for a short while. The color change of the deciduous trees in the fall season provides an escape like no other. There is a period of four weeks that I live for. The cool and dry weather combined with the beautiful colors works wonders on mental health.

My newly discovered outlet soon came to a halt. I had become so reliant on the trail for my daily relief that I would hike it even in inclement weather. In early December of 2021, I took a terrible fall near the top of the trail.

My ankle was broken.

There was snow on the ground. Everything, including the copious amount of rocks on the steep surfaces, was slippery. No one else was on the trail, and my cellular phone was dead. To add insult to injury, the left ankle happened to be my “good” one. I had broken my right ankle several times over the course of my life, which led to a difficult surgery and recovery.

The daily hike helped with many of the problems I was facing but was no panacea. I was carrying too much weight on my body. I had too much stress. Something needed to change. Lying in the snow, alone and in pain, brought forth a mental acuity that is hard to achieve on a normal day. No help was coming that day. I needed to get myself off the trail, and no amount of self-pity would change that reality. I was overweight, and there was no way I could support myself on the broken ankle. This time around, the ankle injury was bad.

Most of the dead limbs around me were useless. I crawled to a smaller, young tree that I pulled from the ground. It took about 2 hours to descend. Several sections were steep, rocky, and wet with snow, slowing progress and creating many opportunities to further injure myself. Some sections had to be done on my rear end, sliding inch by inch. Reaching my vehicle was a relief, albeit a painful one. Sitting in the captain’s seat of my dented Nissan never felt so good.

The first order of business was to get to the nearest medical center. With my “tree” in hand, I tried to traverse the parking lot to the nearest office that had an orthopedic specialist on call. It seemed like an even more difficult task than getting off the mountain (which happened to be in the distant view). A good Samaritan, who was a military vet, recognized my distress in the parking lot and got me a wheelchair.

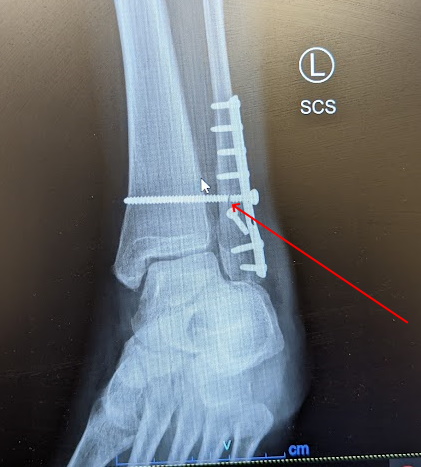

I ended up getting two opinions on the state of my ankle. The orthopedic surgeon who reviewed the X-ray was clear on the need to get into surgery in short order. My prior ankle surgery had revealed an extreme sensitivity to anesthesia, so I knew recovery would be difficult this time. A significant amount of metal, including titanium screws, was added to stabilize the fibula and tibia.

Along with the typical difficulties of recovery, I no longer had my hike to clear my mind. About 6 weeks into recovery, I hit another setback: a titanium screw that was holding the fibula and tibia in a stable position broke.

This discomfort was greater in magnitude than what I experienced the day I had to get off the trail. After another surgery and another 8 weeks, normal life started to feel within reach. I was in “the boot” and permitted to walk on the ankle and move about. I was prescribed physical therapy to regain mobility in the ankle.

This is where the new passion and purpose was born.

After a few sessions of PT, I quickly realized the hour in the fluorescent-lit medical office was the antithesis of what I needed. That is not to say the simple exercises weren’t important, but the setting and context felt wrong. It didn’t feel like I was in control of my health and recovery. I was a reactive participant.

One positive aspect of the hike was the nature of the exercise itself. If you hike 2 km up the mountain, you must return. There is no quitting early. The total return on investment is huge, both mental and physical. However, the hike was not an option. It was late spring, and I knew I needed to be outside and in the driver’s seat. It wasn’t long before I found myself in the local bike shop.

They saw me in the “boot” and offered a prescription for what would become the key to my recovery and a shift in how I would approach life. This shop sells high-quality, serviceable machines. The price tags were much higher than what I would have previously considered. However, they observed my predicament and educated me on the state of bike technology, in particular electric bikes (e-bikes) with a mid-mounted motor.

A mid-mounted, or mid-drive, motor generates an assistive torque which is translated through the bike’s gear train. These machines are more costly but are in a different class than e-bikes with a hub motor integrated into the rear wheel. There is no “throttle”. Your legs are still the prime motivator.

A mid-mounted motor provides its assist through the bike’s gearing. This means the motor can be significantly smaller than in a hub motor design, because it gets the mechanical advantage of the gears. The overall mass of the machine is significantly less than that of a hub motor design, to the point where the bike is perfectly usable with the motor turned off or the battery discharged. It wasn’t long before I was walking out the door with a Specialized Turbo Como 4.0 650b.
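
To make the gearing argument concrete, here is a minimal sketch of the torque multiplication a mid-drive gets from the bike’s drivetrain. It assumes an idealized, lossless chain drive; the function name and the tooth counts in the example are hypothetical illustrations, not Specialized Turbo Como specifications.

```c
/* Idealized (lossless) chain drive: torque applied at the crank, from the
 * rider plus a mid-drive motor, is multiplied by cog_teeth / chainring_teeth
 * at the rear wheel. A hub motor bypasses the gears entirely. */
double rear_wheel_torque_nm(double crank_torque_nm, int chainring_teeth, int cog_teeth)
{
    return crank_torque_nm * (double)cog_teeth / (double)chainring_teeth;
}
```

With a hypothetical 40-tooth chainring, 50 Nm at the crank becomes 52.5 Nm at the wheel in a 42-tooth climbing cog, but only 13.75 Nm in an 11-tooth cog. A hub motor drives the wheel through a fixed ratio, so it has to be sized to produce the high climbing torque on its own.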

The availability of assistance was extremely helpful. I still had pain in my ankle. There were days I did not want to ride. The extra help was enough of a bump to make any day a good day to ride. While it is true that the assist removes some of the effort, many riders find themselves spending significantly more time on the bike. This effect has been studied, revealing that e-bike ownership can lead to significant positive outcomes because owners ride more often and for longer periods of time.

My normal commute to my desk by automobile could take up to 20 minutes on a busy street. By bike, it was 30 minutes through a network of paths and streets. This new commute was a wonderful replacement for my hikes. I got all the same benefits as well as the utility of getting to my office. It wasn’t long before I found myself taking 2-, 3-, or 4-hour journeys on evenings and weekends. Over time I lowered the assist level, which significantly extended battery life and gave me more exercise. I also found myself dropping significant weight while having time for audiobooks and mental health breaks.

This newly found passion and purpose was a relief. Within 5 seconds of starting a ride, I feel the positive effects. The forward velocity and feeling of control over the machine are totally awesome. It is the same feeling of passion and purpose as with the guitar, just on a different axis.

It wasn’t long before I discovered a practical application of technology to my bike ride. There is no shortage of biking accidents involving cars. My personal experience was that busy city streets could be as dangerous as a “back road”. In some cases, the rare vehicle on a back country road can come from behind when you aren’t expecting it. I have had several close calls.

There are some existing technological solutions on the market. For example, there is a radar sensor that detects an approaching vehicle. I quickly found that this technology had a relatively high error rate in certain scenarios. Its audible feedback was annoying and easy to tune out.

What I wanted was a bit more information about what was behind me for context. The human brain can do quite a bit with a small amount of information. I felt that low-resolution imagery with better feedback, presented in a terse, non-distracting manner, would be an ideal solution for my needs. I also wanted an idea of what was coming up behind me: another cyclist, a large pickup, or even a Pennsylvania black bear. It can happen!

I never felt comfortable turning my head on the e-bike. It is not a terribly fast experience, but there is added velocity. I like my head facing forward. I tried both helmet and handlebar mirrors. They were somewhat acceptable but small and “shaky”.

Formation of the Technical Vision

My sense of the solution is to create a stylized representation of my rear view on a non-distracting display located in the center of the bike handlebar. Something akin to a “colored pencil sketch”. I feel that too much information is just as bad as not enough. Lucky for me, I was privy to some upcoming technology components that could implement what I had envisioned.

This general concept is not new. However, there have been some recent technological developments that make it viable for the bike use case. The biking application has some special requirements that need to be considered, especially when considering an increased forward velocity of a mid-mounted motor e-bike or an even faster rear hub motor model.

There are many options for bike computers. Many have LCD displays to display simple maps and telemetry. In the case of radar technology, you get a simple “dot” on the screen. What I wanted was a better visual representation of the scenario behind me. It is important to state that a high resolution “4k” camera feed is not ideal in my view. Attention must be focused forward. The idea is for technology to filter and present only what matters.

The fundamental technical vision is to detect hazards coming from behind, notify the rider, and then present the scene in a simple manner. Most of the detail in the rear scene adds no information needed for safety purposes. The technology should filter out what isn’t important but present enough detail for the neural net in my head to do the rest, be it a Ford F-150 or a Pennsylvania black bear.

This is the essence of BunnyVision: to give your mind the information it needs when it needs it, so you can ride… freely… and safely.

Rabbits have a nearly 360-degree field of view, which gives them visual awareness of what is behind them. I am also confident that once “Bunny” is in your locus of awareness, you will not forget the name.

Concept Hardware and Rider Experience

There are three primary physical components in BunnyVision:

- The imaging system with purpose-built object detection.

- The display system that presents the rear scene in a terse, non-distracting manner.

- A haptic feedback system that can cue the rider to view the display.

There are some details that I have purposely left out, partly because I can’t give away all the secrets just yet, and partly because some design decisions are still pending real-world experience. The first order of business will be to satisfy the base features so we can ride with Bunny. Then we can sprinkle on some sugar.

One of the primary objectives of BunnyVision is to present information so a rider can quickly gain situational awareness of what is behind them. To accomplish this, we will construct a processing pipeline composed of a simple object detection model, an edge detection filter, and a low-color-count shader. In my initial outdoor experiments, this combination was highly effective at providing useful information without being distracting.
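
The edge detector used in the pipeline is not specified. As a minimal sketch, assuming a classic 3x3 Sobel operator on an 8-bit grayscale frame, the edge stage could look like the following. `sobel_edges` and the |Gx| + |Gy| magnitude approximation are illustrative choices, not the BunnyVision implementation.

```c
#include <stdint.h>
#include <stdlib.h>

/* Sobel gradient magnitude of an 8-bit grayscale frame (row-major).
 * Uses |Gx| + |Gy| (an L1 approximation of the Euclidean magnitude) to
 * avoid a square root, clamped to 255. Border pixels are set to 0. */
void sobel_edges(const uint8_t *src, uint8_t *dst, int width, int height)
{
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            if (x == 0 || y == 0 || x == width - 1 || y == height - 1) {
                dst[y * width + x] = 0; /* no full 3x3 neighborhood here */
                continue;
            }
            const uint8_t *p = &src[y * width + x];
            int tl = p[-width - 1], tc = p[-width], tr = p[-width + 1];
            int ml = p[-1],                         mr = p[1];
            int bl = p[width - 1],  bc = p[width],  br = p[width + 1];

            int gx = (tr + 2 * mr + br) - (tl + 2 * ml + bl); /* horizontal change */
            int gy = (bl + 2 * bc + br) - (tl + 2 * tc + tr); /* vertical change */
            int mag = abs(gx) + abs(gy);
            dst[y * width + x] = (uint8_t)(mag > 255 ? 255 : mag);
        }
    }
}
```

On a microcontroller, a filter like this is a natural candidate for running on the downscaled region of interest rather than the full frame, which keeps the per-frame cost small.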

Conceptual Display Animations

The concept animations are within the ballpark of how BunnyVision looks on the display. I can’t wait to show the real thing. The output shading (64-color) is constrained by a specialized display technology that I will be discussing later. It turns out the constraint is perfect for the application. There is an aesthetic to the display that is hard to capture. There is much work to be done on this rendering pipeline. Early tests have shown it to be a good balance of processing requirements and fidelity that communicates just the right amount of information without being a distraction.
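
A 64-color palette works out to 4 levels per channel (4 x 4 x 4 = 64). The sketch below assumes a 2-bit-per-channel RGB222 code and combines it with an edge mask to approximate the “colored pencil sketch” look. The bit packing, `sketch_shade`, and the edge threshold are hypothetical, since the panel’s actual pixel format is not described here.

```c
#include <stdint.h>

/* Map an 8-bit channel to the nearest of 4 levels (0, 85, 170, 255),
 * returned as a 2-bit code 0..3. Integer rounding avoids floating point. */
static uint8_t quantize_channel_2bit(uint8_t v)
{
    return (uint8_t)((v * 3u + 127u) / 255u);
}

/* RGB888 -> 6-bit RGB222 code, packed as rr gg bb in bits 5..0.
 * This packing is an illustrative assumption, not the panel's format. */
uint8_t rgb888_to_rgb222(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint8_t)((quantize_channel_2bit(r) << 4) |
                     (quantize_channel_2bit(g) << 2) |
                     quantize_channel_2bit(b));
}

/* Hypothetical "sketch" shader: strong edges become dark outlines and
 * everything else is posterized to the 64-color palette. */
uint8_t sketch_shade(uint8_t r, uint8_t g, uint8_t b,
                     uint8_t edge, uint8_t edge_threshold)
{
    if (edge >= edge_threshold)
        return 0; /* black outline (RGB222 code 0) */
    return rgb888_to_rgb222(r, g, b);
}
```

Posterizing to so few levels throws away most texture, which is the point: what survives is outline and broad color, roughly the amount of detail the text argues a glance can absorb.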

Technical Note:

These concept animations are provided as animated GIF files. Some browsers, notably Chrome, play them at a reduced frame rate. You can download them to your local machine to view them at the intended rate (30 FPS).

Riding Free

Hazard Detected

I admit this conceptual naming is a product of my childhood cinema experience in the 1980s and 90s. The key concept is that the imaging system detects a potential hazard, adds graphical detail to the display, and triggers a notification.

Target Lock

The object detection system will box a potential hazard and provide haptic feedback.
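
Here is a hedged sketch of the “target lock” step: pick the most prominent detection and decide which handlebar should receive the haptic cue. It assumes the detector emits bounding boxes with confidence scores, uses box area as a rough proxy for proximity, and assumes an un-mirrored rear-facing camera, in which the right half of the image corresponds to the rider’s left. `detection_t`, `select_target`, and `haptic_side_for` are hypothetical names.

```c
#include <stddef.h>

/* Hypothetical detection record: bounding box in pixels plus a score. */
typedef struct {
    int x, y, w, h; /* top-left corner, width, height */
    float score;    /* confidence, 0.0 .. 1.0 */
} detection_t;

typedef enum { HAPTIC_NONE, HAPTIC_LEFT, HAPTIC_RIGHT } haptic_side_t;

/* Choose the detection with the largest box area among those at or above
 * min_score. Returns NULL when nothing qualifies. */
const detection_t *select_target(const detection_t *dets, size_t n, float min_score)
{
    const detection_t *best = NULL;
    long best_area = -1;
    for (size_t i = 0; i < n; i++) {
        long area = (long)dets[i].w * dets[i].h;
        if (dets[i].score >= min_score && area > best_area) {
            best = &dets[i];
            best_area = area;
        }
    }
    return best;
}

/* With an un-mirrored, rear-facing camera, image right = rider's left. */
haptic_side_t haptic_side_for(const detection_t *t, int frame_width)
{
    if (t == NULL)
        return HAPTIC_NONE;
    int center_x = t->x + t->w / 2;
    return (center_x >= frame_width / 2) ? HAPTIC_LEFT : HAPTIC_RIGHT;
}
```

The score threshold is where the “annoying false alarm” problem lives: raising it trades missed detections for fewer spurious cues, and a real system would likely also require a detection to persist for a few frames before locking.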

Target Engaged

As the potential hazard comes nearer, the colorized region uses more of the display to offer a higher-fidelity representation. The rider does not have to turn their head to understand what the hazard is. A very short glance at the display can provide just the right amount of information for the neural net inside your head to fill in the rest.
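
One simple way to make the colorized region grow as a hazard approaches is to pad the target box in proportion to its own size and clip it to the frame. Because the box itself grows as the object gets closer, the colorized area grows with it. This is a sketch under that assumption; `rect_t`, `colorized_region`, and `pad_percent` are hypothetical.

```c
/* Axis-aligned rectangle in pixel coordinates. */
typedef struct { int x, y, w, h; } rect_t;

/* Grow the target box by pad_percent of its own width/height on each side,
 * then clip to the frame. Pixels inside the result would be rendered in
 * color; pixels outside would stay in the sparse edge-only style. */
rect_t colorized_region(rect_t box, int pad_percent, int frame_w, int frame_h)
{
    int pad_x = box.w * pad_percent / 100;
    int pad_y = box.h * pad_percent / 100;
    int x0 = box.x - pad_x, y0 = box.y - pad_y;
    int x1 = box.x + box.w + pad_x, y1 = box.y + box.h + pad_y;

    if (x0 < 0) x0 = 0;
    if (y0 < 0) y0 = 0;
    if (x1 > frame_w) x1 = frame_w;
    if (y1 > frame_h) y1 = frame_h;

    rect_t r = { x0, y0, x1 - x0, y1 - y0 };
    return r;
}
```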

Haptic Feedback and Notifications

The notification concept is the most difficult to communicate in prose. Notifications will not be delivered via an audible buzzer, beep, or annoying tone. During my initial evaluation of the existing market, a “beep” was the first thing my mind turned off. When combined with a poor object detector, the resulting system was more annoying than helpful. The objective is to do a bit better than the robot from Lost in Space.

What I envision can best be described as a “differential” feedback system, meaning that there will be independently controllable feedback on the left and right handlebars. However, this will not be implemented with a simple “buzz” like you might find in a game controller. The goal is to viscerally “feel” the feedback, as if the rider and machine are connected. There will be independent control over intensity, wavelength, and envelope.
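
To illustrate the three controls, here is a sketch that renders one handlebar channel as an enveloped carrier. Intensity scales the amplitude, the carrier frequency sets the wavelength (period = 1 / frequency), and a linear attack/release envelope shapes the pulse. It assumes a sampled drive signal for some unspecified actuator; `haptic_params_t` and `haptic_render` are hypothetical, and a triangle carrier is used so the code needs no math library.

```c
#include <stdint.h>

typedef struct {
    float intensity;    /* peak amplitude, 0.0 .. 1.0 */
    float frequency_hz; /* carrier frequency; wavelength (period) = 1 / frequency */
    float attack_s;     /* linear ramp-up time */
    float release_s;    /* linear ramp-down time before the pulse ends */
    float duration_s;   /* total pulse length */
} haptic_params_t;

/* Linear attack / sustain / release envelope at time t (seconds). */
static float envelope_at(const haptic_params_t *p, float t)
{
    if (t < 0.0f || t >= p->duration_s) return 0.0f;
    if (p->attack_s > 0.0f && t < p->attack_s) return t / p->attack_s;
    float remaining = p->duration_s - t;
    if (p->release_s > 0.0f && remaining < p->release_s) return remaining / p->release_s;
    return 1.0f;
}

/* Triangle wave in [-1, 1] that starts at 0; cycles must be >= 0. */
static float triangle(float cycles)
{
    float phase = cycles - (float)(int)cycles; /* fractional part */
    if (phase < 0.25f) return 4.0f * phase;
    if (phase < 0.75f) return 2.0f - 4.0f * phase;
    return 4.0f * phase - 4.0f;
}

/* Fill buf with n signed 16-bit samples of the enveloped carrier. */
void haptic_render(const haptic_params_t *p, int16_t *buf, int n, float sample_rate)
{
    for (int i = 0; i < n; i++) {
        float t = (float)i / sample_rate;
        float s = p->intensity * envelope_at(p, t) * triangle(p->frequency_hz * t);
        buf[i] = (int16_t)(s * 32767.0f);
    }
}
```

For differential feedback, each handlebar would get its own `haptic_params_t`, for example a stronger, sharper pulse on the side the hazard is approaching from and a softer one, or none, on the other.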

I received a compelling demonstration of a similar concept at World Maker Faire 2016 in New York City. I have been waiting for the ideal application for implementation and I think I found it. This concept is the least developed, and the most speculative. However, I believe it is possible to achieve a result that will be a true value add to the experience.

Enabling Technology Components

NXP MCX N947 and eIQ Neutron

Object detection models are not new. There are a wide range of models and training sets available in the public domain to precisely identify objects in a scene and classify behaviors. This use case doesn’t require highly complex models. In fact, quite the opposite. A simple model can solve enough of the problem such that the human brain can do the rest. Using a simpler technology component to do a bandwidth reduction is an ideal use case for the biking application. We don’t need to classify all the possible combinations of automobiles, people, etc. We just need enough to identify what could possibly be a hazard and let the brain do the rest.

A typical approach would be to use a high-end application processor running a high-level language, possibly consuming power on the order of watts or tens of watts. This is completely unnecessary for the biking application when considering cost, design complexity, and battery life requirements.

I was one of the lucky few to get early access to the NXP MCX N series microcontrollers.

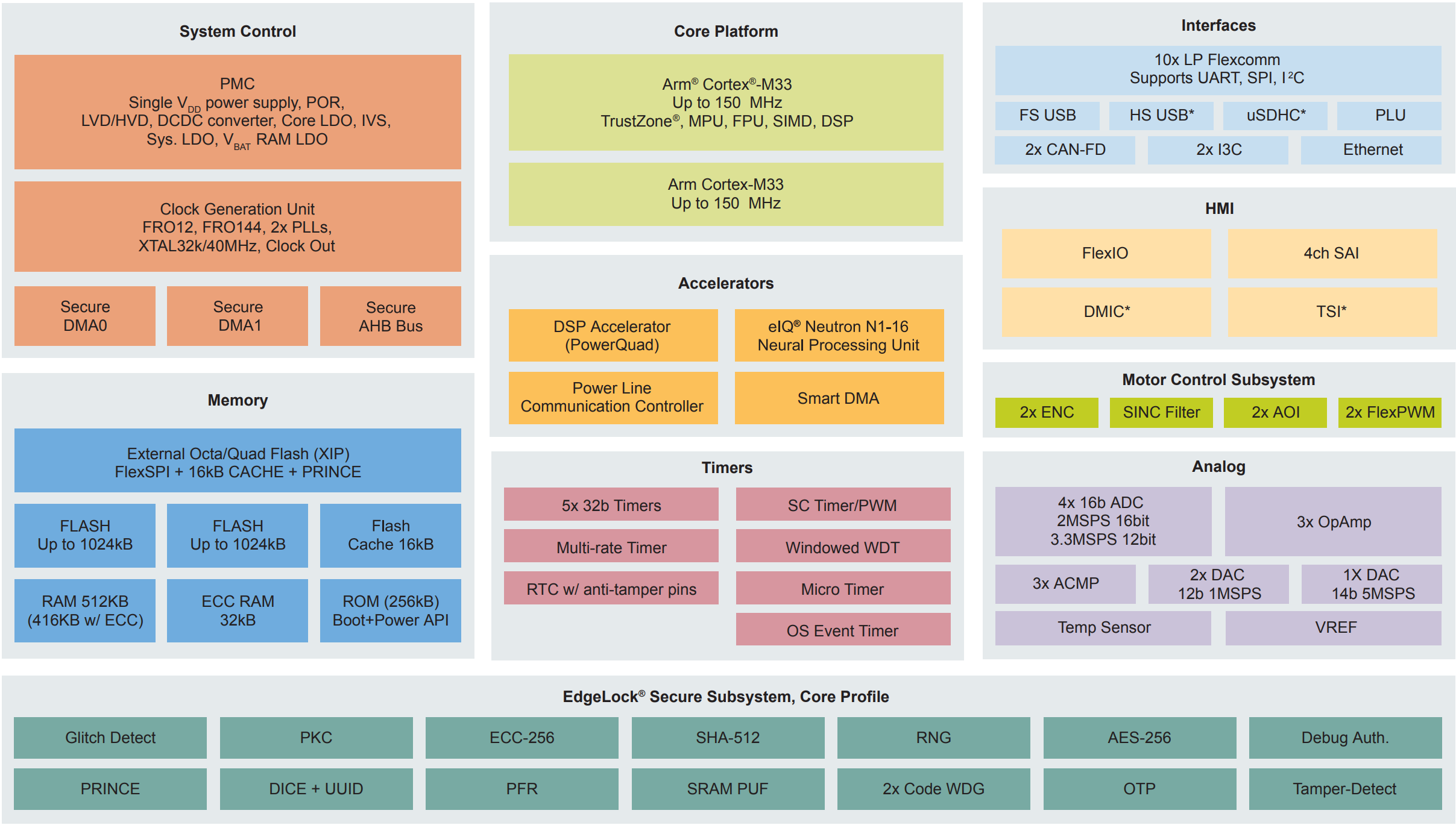

In the right hands, a dual-core 150 MHz microcontroller can do quite a bit. With the NXP eIQ Neutron Neural Processing Unit (NPU) and the SmartDMA IO coprocessor, an application like BunnyVision becomes possible on a microcontroller. The PowerQuad engine and CoolFlux DSP are also available for accelerating mathematical operations.

When I saw an early version of the “face detect” demo for the new MCX N947, I realized that I had a good candidate technology for the BunnyVision imaging sensor. It was just enough to implement what I envisioned. With some work on an application-specific object detection model, I believe the MCX N947 can do what is needed within a tiny power envelope. The eIQ Neutron N1-16 is the smallest instantiation of the NPU. This variant represents the entry point of the technology, and there will be other offerings in the future for upgrades.

Low Distraction Sunlight Readable Display Technology

An extremely important aspect of BunnyVision is how the visual information will be presented. Routing a camera feed to a high-resolution LCD is not what I had envisioned. The time on the bike is a special place to let my mind work. Yet another “do-everything gadget” would not add more to the experience. My conjecture is that the display should be as low-distraction and readable as possible while providing more than a “dot” on a screen.

A challenge with LCDs, even with the advanced technology in your cellular phone, is sunlight readability. This is a critical requirement for a bike application. The brute-force approach is to use a display with an extremely strong backlight (>1000 nits). The immediate challenge is battery life: significant energy is consumed in a backlight that is competing with the giant ball of fire in the sky known as the sun.

E-Ink technology has been useful for tablets and e-readers. However, its refresh rates are not suitable for an application like BunnyVision. I alluded to this earlier, but I have been aware for some time of advancements in color reflective displays that help push the BunnyVision concept forward. One particular technology, known as “Memory-In-Pixel” (MIP), has been available for some time in monochrome format.

Two notable aspects of MIP technology are pixel density and power consumption. As an example, Sharp Microelectronics manufactures a 2.7” monochrome MIP display with a 400x240 resolution. Even though it is monochrome, the visual result is very crisp. Because it is reflective, it looks fabulous in direct sunlight. Power consumption is orders of magnitude lower than that of traditional backlit LCD technology.

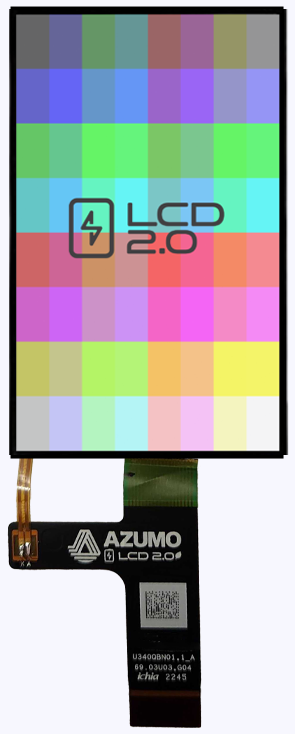

Technology has moved forward in this space, and there are now larger-format color versions of MIP technology. I was fortunate to have early access to the Azumo 3.4” 272x451 64-color MIP display in 2023.

The concept animations are absolutely stunning in direct sunlight when presented on this display. The look and feel were a nearly perfect fit for the aesthetic that I had envisioned. I can’t wait to show off the display with live data.

Another unique aspect of the Azumo 3.4” display is the front light technology. Reflective displays look even better as more light hits them. When riding early morning or at dusk, the front light technology can illuminate the display using a fraction of the energy of a traditional backlight. The technology in this display directly translates to improved battery life.

MIP technology has tradeoffs in terms of interface speeds and refresh rates. However, I was able to achieve a “live” refresh rate that is suitable for the stated objectives. There are technical details around using this display for the BunnyVision project that I will share in future chapters of this storyline. The display is a key part of presenting visual data, and I wanted to present it early in the project because the Azumo product is compelling in the context of this application.
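
The interface-speed tradeoff can be sized with simple arithmetic. The sketch below assumes the 3.4” 272x451 panel is fed 6 bits per pixel (64 colors), tightly packed, at the 30 FPS rate of the concept animations, and it ignores per-line command and address overhead. The packing and overhead assumptions are illustrative; the Azumo panel’s actual interface format is not described here.

```c
#include <stdint.h>

/* Bytes needed for one full frame at the given bit depth, rounded up. */
uint32_t frame_bytes(uint32_t width, uint32_t height, uint32_t bits_per_pixel)
{
    return (width * height * bits_per_pixel + 7u) / 8u;
}

/* Raw link bandwidth (bits per second) to push full frames at fps.
 * Ignores per-line command/address overhead that real panels add. */
uint32_t link_bits_per_second(uint32_t width, uint32_t height,
                              uint32_t bits_per_pixel, uint32_t fps)
{
    return width * height * bits_per_pixel * fps;
}
```

Under these assumptions a full frame is 92,004 bytes, and full-frame updates at 30 FPS need about 22 Mbit/s of raw link bandwidth. If the panel supports line-addressed partial updates, as Sharp’s Memory LCDs do, only the lines that changed need to be sent, which can lower the average considerably.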

Moving Forward with BunnyVision

Building useful and purposeful products is a delicate interplay between algorithms, software, electronic systems, constraints, and industrial design. BunnyVision is no different. It will be a long journey but worth the effort. Future chapters will include plenty of technical details on display technology, object detection models, and the imaging system built around the MCX microcontroller.

I hope I was able to communicate the purpose behind the project. In the end, it will be what pulls us through the difficult parts. I am always on the lookout for technological confluences that can solve problems, especially ones I am passionate about.

Building BunnyVision in 2024 will be quite a ride!